Microsoft Translator now lets you translate in-person conversations

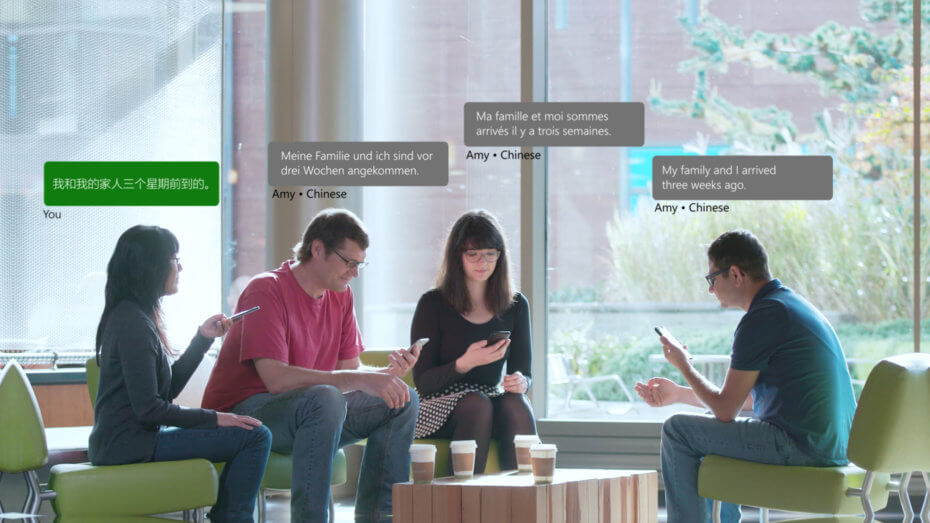

Microsoft has announced an update to its Microsoft Translator app that shows live translations of what nearby people say during in-person conversations. Everyone participating in the conversation uses the app and sees the translated words on their own device. For some languages, devices can also provide spoken translations.

To use the feature, you open the app (available on Amazon, Android, iOS, and Windows) or the new website for the feature and select your language. The app generates a code and a QR code, which other people can scan using the Microsoft Translator app. Then everyone can start using the app together.

“The speaker presses the keyboard space bar or an on-screen button in walkie-talkie-like fashion when talking. Seconds later, translated text of their spoken words appears on the screen of the other participants’ devices — in their native languages,” Microsoft’s John Roach wrote in a blog post.

This is an interesting enhancement to the already powerful Microsoft Translator app, which lets you translate spoken and written text, as well as text found in existing images or in photos you take with the camera on your device. That said, the app has previously made it possible for two people to carry on a spoken conversation in different languages.

The new feature is more powerful because more than two people can use it, across multiple devices.

Like Skype Translator, which offers real-time translations during Skype calls, Microsoft Translator’s new in-person capability relies on deep learning. That approach involves training artificial neural networks on large amounts of data, such as voice snippets, and then having the networks make inferences about new data.

Google has started using neural machine translation in Google Translate. But Google currently doesn’t offer anything like what’s new in Microsoft Translator.
